The recently launched public demo of Facebook parent Meta's artificial intelligence (AI) language model has been taken down after scientists and academics showed it was producing a large amount of false and misleading information, including citations that attributed non-existent papers to real authors, while filtering out entire categories of search.

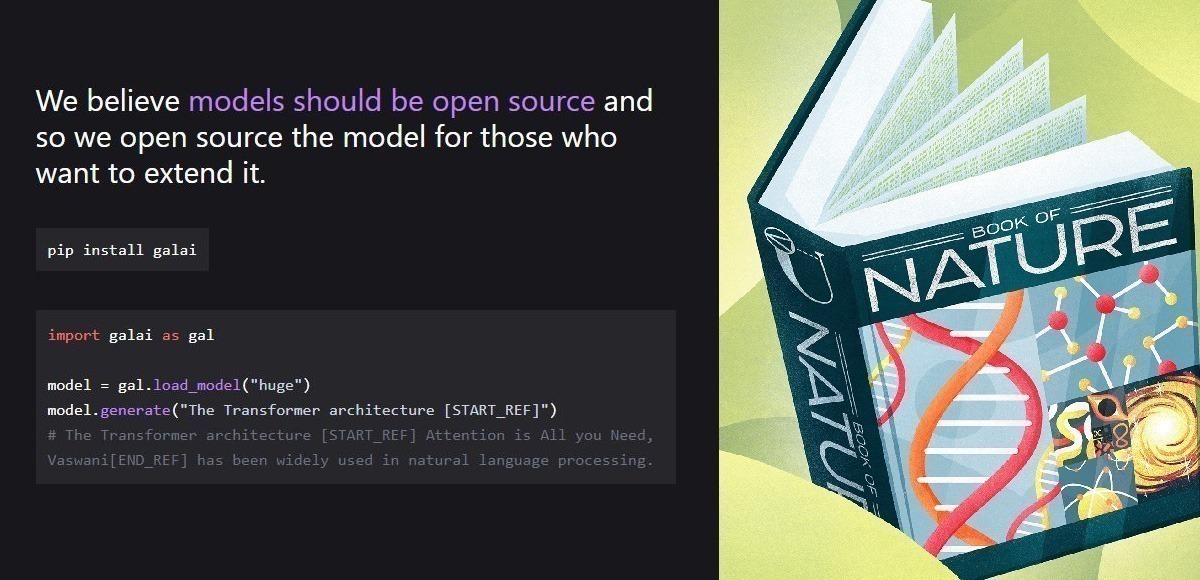

Galactica (GAL) is described by the company as an AI language model that “can store, combine, and reason about scientific knowledge”: solving equations, predicting citations, summarizing research articles, and performing a number of other useful scientific tasks. However, it generated misleading or simply wrong texts from this material, as the director of the Max Planck Institute for Intelligent Systems explained in a Twitter thread, citing several cases after trying the tool, which was available for only a short time.

“In every case it was wrong or biased, but it sounded correct and credible,” wrote Michael Black. “I think that is dangerous.”

I asked #Galactica about some things I know about and I'm troubled. In all cases, it was wrong or biased but sounded right and authoritative. I think it's dangerous. Here are a few of my experiments and my analysis of my concerns. (1/9)

—Michael Black (@Michael_J_Black) November 17, 2022

Although the AI's articles sounded authoritative and credible, they were not supported by actual scientific research. Galactica even cited the names of real authors, but with links to non-existent GitHub repositories and research papers.

Others pointed out that the model did not return results for a range of search topics, likely because of Galactica's automated filters. University of Washington computer science researcher Willie Agnew also noted that when querying topics like “queer theory,” “racism,” and “AIDS,” the AI returned no results.

Refuses to say anything about queer theory, CRT, racism, or AIDS, despite large bodies of highly influential papers in these areas. It took me *5 minutes* to find this. It's obvious they didn't have even the most basic ethics review before public release. Lazy, negligent, unsafe. https://t.co/zKbSPdIN0I pic.twitter.com/DTQjtn2P21

— Willie Agnew (@willie_agnew) November 16, 2022

Image: Tecmasters/Galactica/Meta

With so much negative feedback, Meta withdrew the public demo of GAL early on Thursday, November 17. The company sent Motherboard a statement in response to the comments, released via Papers With Code, the project responsible for the system.

“We appreciate the feedback we have received so far from the community, and have paused the demo for now,” the company wrote on Twitter. “Our models are available to researchers who want to learn more about the work and reproduce the results in the paper.”

Thank you everyone for trying the Galactica model demo. We appreciate the feedback we have received so far from the community, and have paused the demo for now. Our models are available for researchers who want to learn more about the work and reproduce results in the paper.

— Papers with Code (@paperswithcode) November 17, 2022

Some Meta officials also weighed in on the decision, suggesting the AI was taken offline in response to the criticism. “The Galactica demo is offline for now,” tweeted Yann LeCun, Meta's chief AI scientist. “It's no longer possible to have some fun by casually misusing it. Happy?”

Galactica demo is offline for now.

It's no longer possible to have some fun by casually misusing it.

Happy? https://t.co/K56r2LpvFD

— Yann LeCun (@ylecun) November 17, 2022

Galactica is not the first Meta AI with serious problems

The current AI model is not the first one released by Meta with bias issues. This year alone, two other attempts were launched with strong biases. In May, the company shared a demo of a large language model called OPT-175B, which researchers said had a “high propensity” for racism and prejudice, much like GPT-3 from OpenAI, a company co-founded by Elon Musk. More recently, it was the turn of the chatbot BlenderBot, which made “offensive and false” claims while carrying on surprisingly unnatural conversations.

Like the examples cited, Galactica is also a language model, a type of machine learning model known for generating text that looks like it was written by humans. Such models work by analyzing large volumes of data to identify patterns or make predictions.

When we use "safety filters" that censor content with a broad brush, we create a less safe world: marginalizing large swaths of the population, erasing critical scholarship. https://t.co/1MJoJDZr11

—MMitchell (@mmitchell_ai) November 17, 2022

Although impressive, the results of these systems show that the ability to produce content in credible human language does not mean that systems like Galactica actually understand it. As these systems become more complex, and humans less able to understand them, there is growing concern among researchers in the field about the use of large language models in any activity that involves decision-making, given the impossibility of auditing them.

This becomes a problem especially when it comes to scientific research. Since text-generating AI systems clearly do not understand what they produce, it is risky to treat such results as scientific works, which are grounded in rigorous methodologies.

Image: Ociacia/Shutterstock.com

Like other researchers, Black is understandably concerned about the consequences of releasing a system like Galactica, which he said “could usher in an era of deep scientific fakes.”

“It offers credible-sounding science that is not grounded in the scientific method,” Black wrote in a tweet. “It produces pseudoscience based on the statistical properties of *written* science. Writing grammatical science is not the same as doing science. But it will be difficult to distinguish.”

The post Public demo of AI with ‘scientific information’ of Meta is taken down after criticism first appeared on 64bitgamer.
